Abstract

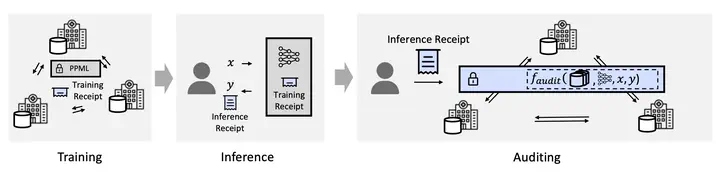

Recent advancements in privacy-preserving machine learning (PPML) are paving the way to extend the benefits of ML to highly sensitive data that, until now, have been hard to utilize due to privacy concerns and regulatory constraints. Simultaneously, there is a growing emphasis on enhancing the transparency and accountability of machine learning, including the ability to audit ML deployments. While ML auditing and PPML have both been the subjects of intensive research, they have predominantly been examined in isolation. However, their combination is becoming increasingly important. In this work, we introduce Arc, a secure multi-party computation (MPC) framework for auditing privacy-preserving machine learning. At the core of our framework is a new protocol for efficiently verifying MPC inputs against succinct commitments at scale. We evaluate the performance of our framework when instantiated with our consistency protocol and compare it to hashing-based and homomorphic-commitment-based approaches, demonstrating that it is up to 10⁴× faster and up to 10⁶× more concise.